Hacking the Music Theory Classroom: Standards-Based Grading, Just-in-Time Teaching, and the Inverted Class

Philip Duker, Anna Gawboy, Bryn Hughes, and Kris P. Shaffer

KEYWORDS: pedagogy, teaching, inverted class, flipped class, standards-based grading, just-in-time teaching, assessment

ABSTRACT: This article focuses on three “hacks” to the traditional model of music theory instruction: standards-based grading (SBG), just-in-time teaching (JiTT), and the inverted classroom. In SBG, students receive multiple grades in reference to clearly defined learning objectives rather than a single grade that may mask weaknesses by averaging them with strengths. JiTT assesses students’ understanding before class so that the instructor can adjust the lesson plan according to their needs. In the inverted classroom, students acquire a basic understanding of the material outside of class so that class time may be used for active engagement rather than lecture. These tools and the technologies that support them have the potential to help strengthen curricula, increase the impact that an instructor can have on undergraduate theory students, and in some cases reduce the amount of time an instructor must devote to achieving that impact. Any one of these hacks can be incorporated within an otherwise traditional music theory course, and the three can also work together synergistically. Both their modularity and their compatibility give them potential to increase instructor efficiency and effectiveness in a wide range of class settings. After describing the scholarly literature surrounding the three hacks as they have been incorporated in a variety of academic disciplines, the authors present four testimonials describing how the three hacks have been incorporated into four diverse music programs: a traditional school of music within a large state university, a large private institution with a focus on jazz and commercial music, a department of music in a mid-sized university, and a small, private university with music classes that combine theory and aural skills.

Copyright © 2015 Society for Music Theory

Introduction

[1.1] One can argue that “hacking” is an essential element of what we do as researchers and pedagogues.(1) At its core, hacking involves taking what already exists, and then adding small or large improvements to make it work better for you. A hacker is neither wholly derivative nor wholly original; hacking entails both an indebtedness to those who have come before and a willingness to question existing methods and perspectives.

[1.2] At the 2013 meeting of the Society for Music Theory, the four of us presented three “hacks” to the music theory class: standards-based grading (SBG), just-in-time teaching (JiTT), and the inverted classroom. Standards-based grading connects student grades to specific learning objectives, provides students with direct and specific information to guide their study, and often involves less grading time for the instructor than traditional methods do. The inverted class moves information transfer and memorization outside of class time so that students’ time with their peers and professor can be devoted to more difficult, cognitively demanding tasks. Just-in-time teaching uses fast, concept-oriented quizzes (usually online) before class meetings to guide and motivate student engagement outside class, as well as gauge which concepts require more attention in class.

[1.3] None of these pedagogical techniques are our own creations. Rather, in the spirit of hacking, we have taken these three techniques, developed and rigorously tested in other fields, and applied them in our own music classes. When we have discussed these music theory pedagogy hacks with others—at SMT, regional conferences, and FlipCamp Music Theory, on Twitter, etc.—the number one response is not “show me the data,” but rather “what would it look like in my class?” A number of pedagogical studies have already demonstrated the positive impact of these hacks in a wide variety of academic fields. The question, then, is how to apply these now-established innovations in our classes, particularly in the undergraduate theory/musicianship core.

[1.4] In Part I of this article, we review the scholarly literature on these three hacks and explore their advantages for college-level students. In Part II, we describe in detail how the four of us have integrated these techniques into the core theory/musicianship sequence at four very different kinds of music programs: a traditional school of music within a large state university, a large private institution with a focus on jazz and commercial music, a department of music in a mid-sized university, and a small, private university with music classes that combine theory and aural skills.

Standards-Based Grading

[2.1] Standards-based grading (SBG) is a system of assessment and record-keeping that explicitly links course goals with assignments and provides both students and instructors with a wealth of information. In their survey of various approaches to assessment, Guskey and Bailey write that “standards-based grading facilitates teaching and learning processes better than any other grading method” because it is data-rich and provides clarity to students and instructors (2001, 91). SBG is criterion-referenced as opposed to norm-referenced (Smith 1973, O’Donovan et al. 2001), meaning that students are assessed and grades are assigned in reference to a set of standardized criteria or learning objectives, rather than according to class rank. Instead of rewarding students who outperform their classmates, a standards-based approach to assessment has across-the-board student success as its goal (Stiggins 2005). Criterion-referenced grading can also help create a supportive learning environment that encourages collaboration and peer instruction, as opposed to norm-referenced systems, which involve competition between students.

[2.2] SBG at its core involves laying out a list of learning objectives for each student—the skills and concepts that instructors, departments, or accrediting bodies want students to master during the semester or unit of study. (See Gawboy 2013 for a discussion of aligning core music-theory course standards with the musicianship standards set by NASM, the National Association of Schools of Music.) Students are then graded, based on those standards, so that final grades reflect the students’ degree of mastery over the material, and grades along the way provide students with feedback to help them achieve that mastery.

[2.3] The biggest challenges this system poses are conceptual and philosophical. Though NASM gives general guidelines for undergraduate musicianship competencies, there is no higher-education analogue of the Common Core State Standards. Thus individual instructors and departments must decide things like: What should students do to demonstrate knowledge of music theory? What are the learning goals for a given course? How should outcomes be broken down into content areas or demonstrable skills or some combination of both? And of course, how should the expectations for each standard be presented to the students in order to ensure that they have the potential to achieve them?

[2.4] The task of setting standards for a class raises seemingly simple questions such as: what should students know or be able to do at the end of the course? And what do you want your students to remember six months after your course has ended? While many instructors explicitly address these questions as they are teaching (regardless of the grading system), the use of standards-based grading makes these decisions both essential and transparent for instructor and students alike (Huba and Freed 2000, 94–98). In defining the proficiencies and knowledge students are expected to have at the end of a course, instructors clarify their pedagogical priorities and ensure that those priorities are emphasized. When instructors think through these questions and define standards, they and their students gain a clear focus on the learning goals of the course (for some sample syllabi, see Appendix 2).

[2.5] One possible strategy to come up with a list of standards is to break down successful past assignments into the skills and knowledge required. It can also be helpful to have two (or more, depending on the course) larger skill categories of standards to help students organize their learning. These could be consecutive units or modules, but could also be broken down by overarching topics such as “Analysis” and “Composition/Writing.” Some of the primary advantages to a standards-based system are that it provides students with clear course goals to strive for, has an efficient method of giving feedback on work, and reveals a wealth of information for the instructor on how the class is progressing.

[2.6] From a student’s perspective, one of the biggest changes in a class using standards-based grading is that they will often receive multiple grades on each of the assignments that they submit. As opposed to being given an assignment or project that has a single score out of 100%, or a single letter grade at the top, students instead get several grades on a single piece of work.

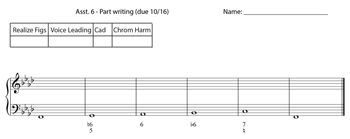

Example 1. Part-writing homework assignment with standards

[2.7] Example 1 shows a typical part-writing assignment. The boxes at the top left of the page tell the students important pieces of information: namely, which standards or learning goals are related to this assignment. As they work, the students know that they will receive separate grades in each of the following categories: realizing figures, voice leading, writing the final cadence, and the use of a chromatic chord (in this case, the Neapolitan). These skills are a subset of the standards for the course; they are four of the fundamental goals that the students are working towards over the course of the semester.

[2.8] Importantly, each box at the top left contains an individual score that is not totaled. In this way, a student’s performance is delineated in terms of how he or she is meeting each objective. By giving a series of grades on an assignment, SBG explicitly acknowledges both what students do well and also where they need to improve. A single grade, on the other hand, represents both strengths and weaknesses, and can be more difficult to interpret. McTighe and Ferrara (1998) have noted that detailed feedback, such as that provided in a rubric, is more useful for student improvement than single letter grades (which they see as more appropriate for summative assessments). In standards-based grading, learning objectives are given more attention than grade averages, which encourages students to focus on specific skill areas. This series of scores maps out how students are progressing toward course goals, and like the use of rubrics, a standards-based system gives more detail than a single grade. Students also appreciate seeing that they did well on certain aspects of an assignment instead of just focusing on what they did wrong.

[2.9] From an instructor’s perspective, there are quite a few differences in the grading approach. Instead of counting up the number of points missed, SBG allows for a holistic assessment within each standard, which can speed up grading time. This is well suited to complex projects, such as model compositions. An instructor can give feedback on multiple aspects through the separate scores, instead of copious written comments. This is especially useful when a student has put a lot of effort into an assignment, but misunderstood a crucial concept (or even misread part of the directions). For example, instead of giving a B+ on a model composition that did everything else well, but did not include an augmented-sixth chord, an instructor can give high marks on all of the standards except for the one associated with using a chromatic chord.

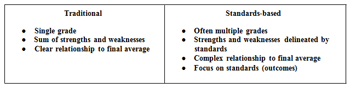

Example 2. Traditional vs. standards-based grading

[2.10] Even at the level of a single assignment, some stark philosophical differences can be detected between a standards-based approach and a more traditional grading method. Some of the important distinctions are captured in Example 2. One apparent advantage of a traditional grading method is that it provides students with a clear idea of how individual assessments affect their final grade. SBG can make this relationship more complex depending on which model of determining final grades is used (see below). But crucially, students’ attention is shifted away from how this assignment fits into their final grade, and instead toward how and whether they are progressing in relationship to the course objectives. Getting multiple grades on an assignment forces students to think of their class progress in terms of the individual standards instead of an overall average or grade.

[2.11] SBG tracks student performance according to course goals as opposed to assessment type or chronology, giving the instructor a more useful overview of how students are moving towards the course objectives. This information can be very valuable when looking at individual student work as well as larger class trends over the course of a semester.

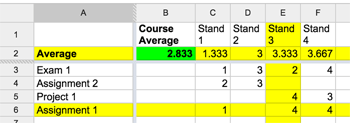

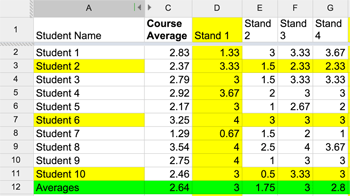

Example 3a. Sample student grade sheet in SBG, spreadsheet view

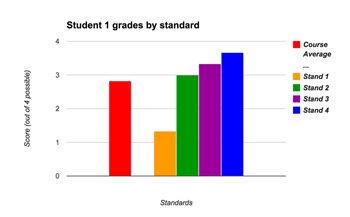

Example 3b. Sample student grade sheet in SBG, chart view

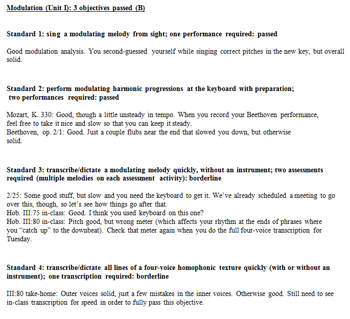

Example 4. Student evaluation with text feedback and scores of passed, borderline, attempted, not attempted

Example 5. Class average page in SBG

[2.12] Example 3a shows a simplified individual student page using SBG with a four-point scale. Columns C–F list the course standards (generically abbreviated Stand 1, Stand 2, etc.). Rows 3–6 list the assessments that have taken place so far in the course. Row 2 shows both the average by standard (i.e., all of the grades in a particular standard are averaged at the top of the column in cells C2, D2, E2, and F2) and the overall course average at this point in the semester (this is the total average of all of the averages by standard, shown in green in cell B2). In addition to showing how this student did on each assignment, it allows the instructor to go back and see that, for example, the student is passing standards 2, 3, and 4, but that standard 1 is posing challenges. O’Connor (2011, 58ff.) argues persuasively that separating information in this way is a much better way to represent student progress. Example 3b shows the same average information from Example 3a, only in chart form; an instructor might prefer to display information in various ways in order to allow the data to be more easily interpretable and meaningful to students.
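The arithmetic behind a sheet like Example 3a can be sketched in a few lines of Python. This is only an illustration: the assessment names and scores are invented (they are not the values shown in the example), and the passing threshold of 3.0 on the four-point scale is an assumption rather than a figure given in this article.

```python
from statistics import mean

# Hypothetical scores on a four-point scale (not the values in Example 3a).
# None marks a standard that an assessment did not address.
scores = {
    "Homework 1": {"Stand 1": 2, "Stand 2": 4, "Stand 3": None, "Stand 4": 3},
    "Homework 2": {"Stand 1": 2, "Stand 2": 3, "Stand 3": 4, "Stand 4": None},
    "Quiz 1":     {"Stand 1": 3, "Stand 2": 4, "Stand 3": 3, "Stand 4": 4},
    "Project 1":  {"Stand 1": 2, "Stand 2": 3, "Stand 3": 4, "Stand 4": 3},
}

PASSING = 3.0  # assumed passing threshold; adjust to the syllabus in use


def averages_by_standard(scores):
    """Average each standard's column, skipping assessments that did not test it."""
    columns = {}
    for marks in scores.values():
        for standard, mark in marks.items():
            if mark is not None:
                columns.setdefault(standard, []).append(mark)
    return {standard: mean(marks) for standard, marks in columns.items()}


def course_average(standard_averages):
    """Average of the per-standard averages (the role played by cell B2)."""
    return mean(list(standard_averages.values()))


standard_averages = averages_by_standard(scores)
needs_attention = [s for s, avg in standard_averages.items() if avg < PASSING]

print(standard_averages)
print(course_average(standard_averages))
print(needs_attention)
```

Note that averaging the per-standard averages weights every standard equally, no matter how many assessments addressed it. Averaging all individual marks together would instead favor the standards that happen to be assessed most often.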

[2.13] Example 4 gives an alternative method of presenting progress in the course to students. Notice the very different grading scale in this example; instead of numerical scores, it relies on more holistic assessments: passed, borderline, attempted, not attempted. This form of feedback is very helpful for students and particularly appropriate for outcomes or assignments that have a high degree of individual student variability.

[2.14] Example 5 illustrates how SBG can provide a more nuanced view of the entire group of students. Each assessment is given as a tab along the bottom of the spreadsheet. Columns D–G list each of the standards for the course, while rows 2–11 list the students in the course. Each row provides a student’s course average (column C) and his or her average for each of the standards (each row essentially takes the top line from the student sheet; i.e., row 2 in Example 3a). Finally, the last row (row 12) provides the class average for all standards combined and each standard individually. Averaging class scores within each standard is more informative than knowing the class average on a whole assignment. This allows an instructor to identify which concepts are causing more difficulty, and allocate instructional time appropriately.
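As a rough sketch of the bottom row of such a class page, the following Python snippet averages each standard across students and lists the standards from weakest to strongest. The student names and numbers are hypothetical, and the function names are ours, not part of any gradebook software.

```python
from statistics import mean

# Hypothetical per-student averages by standard (one row per student,
# as in the class page described above).
class_sheet = {
    "Student A": {"Stand 1": 2.0, "Stand 2": 3.5, "Stand 3": 4.0},
    "Student B": {"Stand 1": 2.5, "Stand 2": 3.0, "Stand 3": 3.5},
    "Student C": {"Stand 1": 1.5, "Stand 2": 4.0, "Stand 3": 3.5},
}


def class_averages(sheet):
    """Class average for each standard (the last row of the class page)."""
    standards = list(next(iter(sheet.values())))
    return {s: mean([row[s] for row in sheet.values()]) for s in standards}


def weakest_first(averages):
    """Standards ordered from lowest to highest class average."""
    return sorted(averages, key=averages.get)


averages = class_averages(class_sheet)
print(averages)
print(weakest_first(averages))
```

With these invented numbers, the first standard in the sorted list is the one an instructor might revisit in the next class meeting.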

[2.15] There are multiple ways of determining final grades in a standards-based system, including different grading scales and/or different weighting of standards. Two of the more common approaches are point scales and Pass/Not Pass scales. The point scale (such as a five-point scale, or the four-point scale used above) has ready parallels with the commonly used 100-point system: grades can be averaged together, and letter grade ranges are designated on the syllabus.

[2.16] When using a Pass/Not Pass system (or even a Pass/Not Pass/Plus scale such as the one described by Jan Miyake in a blog post), it is common to designate certain standards or a certain number of standards as mandatory for a given grade (e.g., students need to pass all of the standards in unit 1 to pass with a “C,” or students need to pass the unit 3 voice-leading standard to get above a “B+,” etc.). Pass/Not Pass systems allow for a simplification of the grading process and, when expectations are clearly defined, fewer grade disputes. Point-based and Pass/Not Pass systems can also be combined (for an example of this, see Appendix Example 2c). In this model, some outcomes are Pass/Not Pass, and others use a point scale. A common setup of these hybrid systems is that students have to pass a certain number of Pass/Not Pass outcomes to get into the “C” range, and from there, their numerical average determines how far above a “C” they achieve.
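One way to picture the hybrid setup just described is the following minimal Python sketch. Every number in it is an assumption made for illustration: the required count of passed outcomes, the four-point cutoffs for “A” and “B,” and the function name itself are hypothetical and are not taken from Appendix Example 2c.

```python
def hybrid_letter_grade(passed, point_scores, required_passes=4,
                        cutoffs=((3.5, "A"), (2.5, "B"))):
    """Combine Pass/Not Pass outcomes with point-scale outcomes.

    passed: list of booleans, one per Pass/Not Pass outcome.
    point_scores: list of scores on a four-point scale.
    required_passes and cutoffs are illustrative assumptions.
    """
    # Gate: too few passed outcomes keeps the student out of the "C" range.
    if sum(passed) < required_passes:
        return "below C"
    # With no point-scale scores recorded, the grade stays at the floor.
    if not point_scores:
        return "C"
    # Above the gate, the numerical average decides how far above "C".
    average = sum(point_scores) / len(point_scores)
    for minimum, letter in cutoffs:
        if average >= minimum:
            return letter
    return "C"


print(hybrid_letter_grade([True] * 4, [4, 3, 4, 4]))
print(hybrid_letter_grade([True, True, True, False], [4, 4, 4, 4]))
```

The second call shows the gate at work: a perfect numerical average does not lift the grade when a required outcome has not been passed.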

[2.17] Importantly, both approaches allow instructors to have certain firewalls in their grading policies. Instructors may set the number of outcomes that a student must achieve in order to pass the class, or mandate that they pass certain standards in order to get above a “C.” By tracking individual content areas, SBG can be very useful in ensuring that students are prepared for the next course in a sequence.

[2.18] One final advantage of SBG is that it allows instructors to maintain a uniform set of standards while also allowing for differentiated approaches to meeting those standards. While an instructor may continue to use the same assignments when changing from traditional grading to SBG, well-crafted standards can allow students more freedom to pursue and demonstrate mastery according to their individual goals and (dis)abilities. For example, a standard such as “I can demonstrate understanding of idiomatic voice-leading of classical cadences” could be fulfilled by an eighteenth-century model composition, a series of phrase-length figured bass realizations, an analytical paper, an in-class presentation, a prepared (or improvised) performance, or an explanatory video. Many instructors take a similar approach when they provide multiple options for a major project (such as composing an idiomatic sonata exposition or writing an analytical paper on a sonata-form movement). By articulating a list of standards that are specific to the desired learning outcomes but open-ended in terms of how a student satisfies the goal, the instructor allows for a diversity of activities without multiplying the number of rubrics required. This flexibility can help accommodate learning differences and disabilities, and the increased student choice can foster intrinsic motivation, resulting in increased student engagement with the task (Guthrie 2001).

[2.19] Of course, student-centered pedagogy does not end with articulating and assessing a set of learning objectives. In what follows, we present two further hacks that are designed to help students meet these course goals.

Inverting the Music Theory Classroom

[3.1] The inverted or “flipped” classroom is a growing trend in both secondary and university-level education. In an inverted classroom, students acquire basic comprehension of a topic outside of class, then come to class to deepen their understanding and actively apply their knowledge. Despite the recent surge in media attention, this is not a new model. One of the oldest educational formats, the seminar, is based on this principle, and several educational initiatives of the past few decades structure student work outside of class so that class time may be used for active engagement with the material and interaction with the instructor and peers.(2) Flipping has recently caught the attention of the mainstream media because many instructors who flip also use digital technology to deliver content, archive resources, and assess student comprehension. Khan Academy, for example, popularized the idea of replacing a long, in-person lecture with snappy online videos that students could watch at home and review at their own pace.(3) The media’s focus on technology has sometimes conflated flipping with online-only education and MOOCs (Massive Open Online Courses).(4) While both may make extensive use of digital resources,(5) a key difference is that the use of technology in an inverted classroom facilitates deeper real-time interaction between students and the instructor (Strayer 2012). In fact, the use of technology is not even necessary. It’s possible to flip using paper handouts or a textbook, provided that the materials present the information clearly enough for students to navigate on their own (Deslauriers et al. 2011).(6)

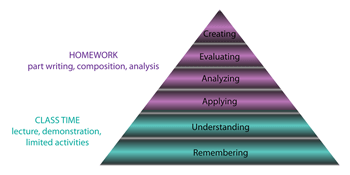

Example 6a. Distribution of learning activities in a traditional theory class, related to Bloom’s taxonomy of learning domains. Lower-level domains are located at the bottom of the pyramid, while higher-order domains are located at the top

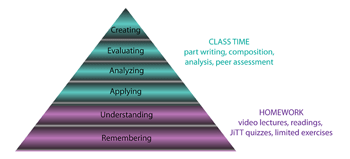

Example 6b. Distribution of learning activities in a flipped theory class, related to Bloom’s taxonomy of learning domains

[3.2] The innovation of the inverted model is not the technology, but rather how it shifts the timing, location, and human support associated with various levels of learning. The purpose of class meetings in many of the more traditional lecture-based music theory courses is to introduce students to new vocabulary, concepts, and basic problem-solving strategies. In other words, class time is devoted to the pursuit of learning objectives occupying the lower rungs of Bloom’s taxonomy, such as remembering and understanding (Example 6a). Students are then asked to synthesize information learned in class and apply it to more complicated analysis or part-writing in their homework. The potential problem with this scenario is that students are left to their own devices just as the learning becomes more challenging and the teaching becomes more interesting. By asking students to acquire basic knowledge prior to class, time with the instructor can be used to develop fluency, improve and refine problem-solving strategies, explore more advanced topics, and engage with the material more deeply through critical discussion, debate, creative projects, and evaluation. This “inverts” the way learning activities are divided inside and outside the classroom (Example 6b). Inverted classrooms have the potential to maximize the benefits of learning within a community, where students can get help and feedback from the instructor and peers. Because class activities may change from period to period, the inverted model also offers instructors the flexibility to tailor learning activities to various student needs.(7)

[3.3] Does it work? A 2011 study compared student outcomes for two large sections (of about 270 students each) of an undergraduate physics course at the University of British Columbia (Deslauriers et al. 2011). The control section was taught as a traditional lecture with a recitation, labs, and homework outside of class. In the experimental section, students spent class time discussing pre-assigned readings and solving problems while receiving formative feedback from the instructor and peers. The researchers found that students in the experimental group attended class more regularly, were more engaged, and achieved dramatically higher test results than the control.(8) A three-year study concluded in fall 2013 at the University of North Carolina showed that doctoral pharmacology students’ scores on a final exam improved 5.1% over a two-year time period using the inverted model. In a post-course survey, 91% of the students reported that inverted teaching had enhanced their learning experience (McLaughlin et al. 2014). Other studies have also reported that students in inverted classrooms perform slightly better than their peers on assessments that gauge acquisition of course content (Bishop and Verleger 2013). However, such assessments may not capture other skills the inverted model can develop, such as the ability to collaborate, and enhanced student engagement with their own learning process (Strayer 2012).

[3.4] Brame (2013) outlined several critical elements for making this model a success. First, students need access to clear, understandable introductory materials that they can navigate on their own. Second, there must be some incentive for students to engage with these materials outside of class, such as a point system, low-stakes quiz, or a classroom environment where everyone is genuinely accountable for preparing. Third, there must be a mechanism to assess basic student understanding before starting activities. If areas of weakness exist, flexibility must be built into the lesson plan so that they can be addressed. Fourth, class activities must be structured so they engage students in developing a higher-level understanding of the material. The inverted classroom model could potentially fail at any one of these points: obtuse, confusing introductory materials, online lectures that are overly long and detailed, lack of incentive for students to prepare for class, lack of instructor’s response to assessments, or classroom activities that merely reiterate out-of-class material and turn class time into drudgery.

[3.5] If some of the content is delivered outside of class, what happens in class? It seems that there are as many different answers to that question as there are class periods. The inverted model has the potential to promote deeper learning as students apply theoretical knowledge to listening activities, composition projects, in-class performances, and discussions of analytical or aesthetic issues. Perhaps most importantly, the model can provide a framework to employ pedagogical techniques that, research suggests, promote student engagement, such as inquiry-based learning, collaborative learning, and peer instruction (Crouch and Mazur 2001; Shaffer and Hughes 2013). The inverted class makes it possible to structure student work explicitly around the very skills that are essential for professional success. NASM guidelines suggest that undergraduate music students demonstrate “the capability to produce work and solve professional problems independently,” “the ability to form and defend value judgments about music, and to communicate musical ideas, concepts, and requirements to professionals and laypersons,” and the ability “to work on musical problems by combining

[3.6] When implemented successfully, the flipped model has the potential to help remediate one of the perennial problems of core music theory: the need to cover wide-ranging content and develop depth of skill within a compressed two-year course sequence. It does so by putting student (rather than instructor) activity at the center of class, empowering students to take charge of their own musical growth and connect their study of theory to a variety of other musical activities.

Just-in-Time Teaching

[4.1] One of the most common questions asked about inverted pedagogy is: How do you ensure your students prepare for class? Since inverted classes require students to learn introductory material on their own, ensuring that students put forth the effort to prepare this material is essential to its success. Students are often loath to prepare for a lecture ahead of time, especially if the stakes are low. Skeptics of the inverted classroom may question whether students can be trusted to engage with the fundamental content of a lesson without direct supervision.

[4.2] On the other hand, how does one know that students are retaining the most pertinent information from a lecture? Furthermore, how can one ensure that a lecture efficiently addresses the parts of the topic that are the most conceptually troublesome for students? Seasoned teachers will interact with their students throughout the lecture and are often quite good at gauging students’ understanding of a concept in real time. Even so, most teachers would enjoy the benefit of having insight into students’ difficulties prior to the beginning of class. Just-in-time teaching offers one means of acquiring such insight.

[4.3] Just-in-time teaching, a term coined by Gregor Novak (Novak et al. 1999), often functions by giving students a brief task, such as a reading assignment or video lecture, followed by a short online quiz, to be completed and submitted between class meetings. The instructor uses the quiz results to gauge student understanding of a topic, and then prepares the upcoming class accordingly so that it will address any common weaknesses and/or misunderstandings. In-class time is likely to be more focused on where the students most need help, and also more efficient, since the lesson does not need to cover aspects that students already grasp. This can often result in more time to spend on in-depth activities that promote deeper engagement with the material.

[4.4] In addition to showing instructors a snapshot of how a class is learning material, JiTT assignment data can provide a rough means of measuring the efficacy of new pedagogical techniques that one has tried. Teachers can also check on overall student preparation and retention of previously learned material. Lastly, JiTT has great advantages for instructors overseeing multiple-section courses. JiTT assignment data gives the coordinator a window into all of the sections, which can be extremely useful when there is little time available for teaching observation.

[4.5] Until recently, JiTT has been employed primarily by instructors of large classes with a long tradition of lecture-based content delivery. In courses that typically only evaluate students with one or two exams, researchers including Jonathan Kibble (2007) and John Dobson (2008) have shown that formative assessment through unsupervised online quizzes results in significant gains for summative assessment. Additionally, Kibble and Dobson suggest that online quizzes are valid predictors of exam performance. Though JiTT is more involved than the simple use of online quizzes, these results show that an online quiz platform can be instrumental in facilitating learning. In contrast, Mark Urtel et al. (2006) performed a study that examined the efficacy of outside-of-class quizzes and found that they do not lead directly to higher test scores. While online quizzes increased student engagement and enjoyment, Urtel et al. found that the quizzes did not have a statistically significant impact on students’ academic performance.

[4.6] It is difficult to weigh the findings of Kibble (2007), Dobson (2008), and Urtel et al. (2006), since none mention the degree to which their quizzes altered their in-class activities. If a course consists entirely of passive lectures and exams, surely any injection of student-centered activity could yield positive results. On the other hand, adding a self-paced, self-assessed online quiz component to an already active classroom may have minimal impact. Unsupervised, automatically-graded quizzes certainly provide the students with some useful feedback, but by using these quizzes as JiTT assignments—that is, by using the assessment data to tailor the classroom experience—the quizzes can provide much more benefit to the students. If an instructor ignores the assessment data, the essential pedagogical function of JiTT is lost.

[4.7] If the fundamental pedagogy of JiTT involves using recently gathered information to influence the activities that occur in a class, then a related pedagogical technique could be to formally gather this information during class. In a traditional lecture-driven organic chemistry class, Kelli M. Slunt and Leanna C. Giancarlo (2004) compared the use of JiTT quizzes with “ConcepTests.”(10) ConcepTests consist of ungraded, multiple-choice quizzes given at crucial stopping points during the lecture. The ConcepTests were decidedly “low-tech”: students held up cards to indicate answers, after which the instructor discussed the question with the class (one could also use clickers for this kind of activity). When the class appeared to be mostly divided on a question, the instructors directed students to turn to their neighbors and debate their answers, thus relying on peer instruction. For comparison, Slunt and Giancarlo assigned JiTT quizzes comprising a combination of drill and preview material to students in another section of the same class. Their findings showed that the average exam scores of students taking JiTT quizzes throughout the semester were significantly higher than those of students who were offered only ConcepTests and those of students who only heard lectures.

[4.8] The ConcepTests used in Slunt and Giancarlo’s experiment were not without merit. In their study, this approach was not as effective as JiTT, but both strategies (JiTT and ConcepTests) had a significant positive impact on students’ success on final assessments. The core tenets of ConcepTests and peer instruction are: 1) concepts are best explained by those who have recently mastered them, and 2) explaining a concept to a peer facilitates a deeper understanding of that concept for both parties involved. As Eric Mazur and Jessica Watkins (2009) have shown, peer instruction works best in tandem with JiTT. ConcepTests are most successful when they can be tailored specifically to students’ needs, and this tailoring is made possible by assigning JiTT quizzes between classes. Both Crouch and Mazur (2001) and Mazur and Watkins (2009) report that peer instruction and JiTT on their own will produce better results than a traditional lecture. However, when combined, they produced the greatest positive impact on students’ conceptual assessments.

[4.9] Because JiTT provides the instructor with information to help tailor the upcoming lesson, it is important to establish a consistent and reliable means of sharing materials between the students and the instructor in a timely manner. Paramount to the success of JiTT is that students complete these tasks with enough time for the instructor to make changes to the lesson plan. Quizzes could be done without technology by simply having students leave paper copies in the instructor’s mailbox. Email response is another relatively low-tech solution that requires very little setup. Alternatively, students could even complete a short quiz at the beginning of class that the instructor could scan quickly while the students work on another task (much like the pedagogy behind ConcepTests). Online quiz platforms, such as those provided by learning management systems, provide a very convenient means for successfully implementing JiTT. While the earliest versions of such software only allowed multiple-choice question formats, current platforms offer numerous sophisticated response modes. With the development of web-based notation software, we may soon even be able to incorporate responses requiring music notation into online quizzes. On the other hand, JiTT doesn’t require complex response modes to be successful. Even multiple-choice questions provide the instructor with substantial information to assess the basic understanding of many music-theory concepts. Notation-based responses can be left for class meetings and assignments.

[4.10] JiTT is a pedagogical approach that has great utility even when used in a traditional lecture-based classroom setting. Nevertheless, it also functions beautifully in tandem with an inverted classroom approach to teaching. More than anything, JiTT helps to dispel the biggest fear of those most skeptical of inverted pedagogy: trusting students to prepare content outside of class. When properly incentivized, JiTT assignments ensure that students prepare the inverted content. The data drawn from these assignments can then be used to tailor and organize classroom activities so that the inverted session is as efficient and effective as possible for students.

Part II: Introduction

[5.1] What does a hacked music theory class look like in practice? How does an instructor take the pedagogical insights of scholar-teachers from a wide variety of disciplines and apply them in a core undergraduate music theory course?

[5.2] Any of the three hacks discussed in this article can be incorporated into an otherwise traditional class environment. JiTT quizzes can be used to motivate student engagement with a reading prior to lecture, and gauge which concepts need the most attention in that lecture. Standards-based grading can be applied to traditional homework assignments done between lectures. And inverted-class techniques can be used with traditional grading schemes and can employ assessment methods other than JiTT quizzes to verify student understanding.

[5.3] However, these three techniques also work rather well together. Standards-based grading provides students with summaries of their progress toward specific learning objectives, in order to better direct those students as they seek to fulfill those objectives, and to help instructors better support their progress towards those objectives. Likewise, just-in-time teaching gives instructors information about student understanding so that class activities can be designed around student needs rather than a pre-determined progression through course content. And the inverted model uses class time for the activities that are most important—or most difficult—for students as they pursue their learning objectives. Employed together, these three hacks to the traditional classroom can make significant progress towards encouraging the development of active, engaged, independent learners.

[5.4] There are many specific ways in which these three hacks can be incorporated and combined in a college-level music theory course to the benefit of student learning. What follows are four “testimonials” in which we explain the specific ways we have hacked our own music theory and musicianship courses. We have incorporated SBG, JiTT, and the inverted class in various forms at four very different institutions, and we present here the benefits—and struggles—that have resulted.

Online Lectures and Inverted Classrooms at Ohio State: How I Became My Own TA (Anna Gawboy)

[6.1] I heard about inverted or “flipped” classrooms in the fall of 2011, halfway through my second year as coordinator of the freshman music-theory curriculum at Ohio State University. I immediately recognized the model’s potential to help alleviate some of the challenges I faced coordinating a large multi-section freshman course. Though my initial attitude was entirely pragmatic, my transition to an inverted classroom led to benefits and challenges I did not anticipate. It fundamentally changed the way I interacted with my students and caused me to rethink some basic assumptions I had about teaching music theory.

[6.2] The freshman theory class at OSU is divided into 5–7 sections of 15–20 students meeting three hours per week (aural skills meets twice a week and is coordinated separately). Like many undergraduate theory programs situated within a large university, we have two fundamental missions. The first is to teach music theory to our undergraduate students, and the second is to prepare our graduate students for future jobs teaching music theory. Graduate teaching associates come from a range of disciplines including music theory, music cognition, composition, and performance. In any given year, my cohort may include Master’s students who are teaching for the first time, seasoned PhD students who are ready to become professionals, and everyone in between. GTAs teach the majority of our theory sections, and without a large lecture, curricular coordination was a challenge. Before we moved to the inverted model, some sections would get ahead of the course trajectory while other sections would fall behind. In rare cases, this was the result of an individual instructor responding to the unique needs of his or her section and taking appropriate measures to meet the learning goals. More often, it was the result of an instructor skimming over foundational curriculum to get to the “fun stuff,” or belaboring a single topic at the expense of others. On course evaluations, undergraduate theory students reported that they wanted a more uniform approach to course content and pacing among sections.

[6.3] Despite the perennial challenges of coordination, the independent sections allowed us to keep our class sizes small. This was intended to promote greater student/teacher interaction and facilitate more hands-on learning,(11) but during my first year, I observed that the instructors spent the majority of class at the front of the room presenting topics, not interacting directly with their students. Given the different levels of teaching experience among instructors, it was not surprising that the lectures ranged in quality and effectiveness. When the class did break into an activity, the students only had time to work with the most basic concepts before class ended, sometimes leaving students unprepared for a more advanced assignment. An inverted course design promised to help us standardize the curriculum across sections, enable us to devote more class time to advanced work, and convert the heterogeneity of instructors from a weakness into a strength.

The Flip

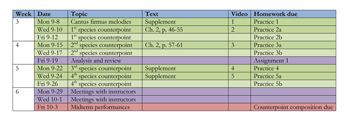

Example 7. Sample Theory I counterpoint module

[6.4] I decided to flip all sections of freshman theory in a two-week pilot at the beginning of the 2012 winter quarter. Despite some initial technological and organizational glitches, the student feedback after the pilot was overwhelmingly positive, so we continued for the rest of the year.(12) After a while, the course settled into a pattern (Example 7).

[6.5] Students watch one or two videos a week. For each video, students complete a short worksheet before they come to class to help them review terminology and practice basic part writing, composition, and analysis skills. Although the worksheets are the most numerous type of assignment, they make up only 10–15% of the final grade. Class time is spent reviewing as needed and going into greater depth in order to prepare for cumulative assignments where students synthesize what they’ve learned in more comprehensive tasks. We take a break from this routine several times a term for more creative learning activities such as composition/performance projects, student presentations, and analytical writing assignments.

Videos

[6.6] Many of my video lessons fall into two parts: a short lecture covering the basics of a given topic followed by a demonstration of selected writing or analysis exercises (see Appendix 1b). During the demonstration section, I’ll walk through a series of steps, asking students to pause the video and fill in something on a practice worksheet before providing my answer (see Appendix 1c). This gives the students immediate feedback as to whether or not they’re on the right track and helps keep them engaged. Video lectures allow students the opportunity to learn the material at their own pace. They may pause the video to take notes, review as needed, and fast-forward through topics they already understand. Archiving videos online enables students to preview the week’s topic over the weekend when they may have more time and re-watch certain videos before a high-stakes exam or project.

[6.7] My initial foray into online lectures was not without mistakes. I had to curb my instinct to over-teach online. From student feedback, I learned that videos seem to be most helpful when they provide a bare-bones introduction to a topic that will be fleshed out and further contextualized in class. Many screencasting guides (such as Scagnoli 2012) propose 5–10 minutes as the ideal length for an online lecture. More limited topics could be covered in even less time, while complex topics could be broken into separate videos covering more digestible chunks.

[6.8] While the vast majority of my students appreciate the advantages that online videos offer, there are always some students who feel they simply cannot learn “from a computer.” I usually assign handouts or textbook readings that summarize the main points and provide some extra topics to explore in class, which everyone can use as a supplement or quick review. The video lectures help us unify our approach to course content across sections and provide an opportunity for greater creativity among individual instructors. In a sense, the videos have brought our curriculum closer to a general trend described by Marvin (2012, 256) toward a lecture-recitation design where faculty members present the majority of the material and graduate assistants help students apply the concepts in smaller groups. With my lectures placed online, I’ve become my own TA.

What Happens in Class?

[6.9] Studies on inverted classrooms and other forms of education involving both in-person and online instruction have emphasized that work done in class must align with and build upon work done outside of class for the model to be successful (Strayer 2012, Ginns and Ellis 2007, Elen and Clarebout 2001). In my own classes, I usually spend the first 5–10 minutes asking students to self-assess or peer-assess selected problems not completed on the video. I might give out an answer key for analysis sections or ask students to develop their own rubric to reveal part-writing errors. I ask students to circle their mistakes, correct them if they have time, write me a note indicating what is still conceptually unclear, and self-identify persistent errors they think they need to work on. During this time period, I walk around and check in with everyone, answering individual questions as needed.

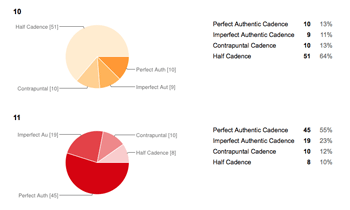

Example 8. Sample student-centered classroom activities

[6.10] I then have about 40–45 minutes of class time remaining. If the students need it, we can review and practice basic skills, but the inverted model seems to work best when we’re able to spend most of the class on activities that get students to synthesize and apply their knowledge in new ways in order to prepare them for future assignments of a more advanced nature. Since core content is presented outside of class, my GTAs also have the freedom to experiment with different teaching strategies during this period of their professional development. The less-experienced GTAs tend to use the activities I share with them, while the more experienced ones develop their own materials. Example 8 provides some descriptions of class activities my teaching assistants have designed and successfully implemented in class. They exhibit a student-centered approach in that they reach beyond the simple acquisition of content towards more comprehensive learning outcomes: the ability for students to solve problems independently and reflect on their own learning, the ability to teach and learn from one another, the ability to assess and evaluate their own work and that of their peers, and the ability to engage musically with course content.

[6.11] Although I had been using active and collaborative learning techniques with my own students for years, the inverted model allowed me to structure my class time more effectively. For example, I used to feel pressure to wrap up an exercise after the majority of students had finished, forcing the slower students to stop even though they were the people who could potentially benefit the most from continued work. Now I build my learning activities in multiple levels so that the more fluent students in the class can transition to a higher-level task while the others catch up. I used to feel rushed debriefing an activity, which usually occurred right before the end of class. Now, there’s often enough time to include a self- or peer-assessment period after the activity has concluded to help students reflect on their learning process. More importantly, however, the inverted model has changed the way class time is used across sections. Before we inverted the course, I sometimes had trouble convincing all graduate instructors to devote time to letting students work on problems in class. Now, active learning is the main purpose of class.

[6.12] One persistent question frequently raised in the literature is how the inverted model changes student learning outcomes. Deslauriers (2011) achieved dramatic short-term results, while McLaughlin et al. (2014) indicated more modest long-term gains. Although these investigations suggested a positive effect for the inverted model, both used standardized tests to assess acquisition of basic content. However, such assessments cannot capture how learning priorities might change between a lecture-based course and an inverted course. I used to give a comprehensive, paper-based final exam at the end of every term, constructed around the stock-in-trade Theory I exercises (Roman-numeral analysis, harmonization, and figured-bass realization). This exam always made me somewhat uneasy because I realized that its primary purpose was not to assess student learning, but rather to enforce standardization of content across sections. In the inverted version of the course, videos and practice assessments standardize basic content, which allows students to demonstrate more creative and synthetic skills on the final exam. The Theory I final exam was split into three different assessments: a timed fundamentals quiz, a take-home part-writing exam that students bring back to class to perform on voices or instruments, and a prepared five-minute analytical presentation/interview based on a composition each student had performed that term in private study (see Appendix 1 for sample materials). In the second semester, we expanded the five-minute interview into a ten-minute class presentation. Students showed their synthesis of harmonic vocabulary through the free written generation of chord progressions, their ability to independently apply key concepts to repertoire beyond the pieces studied in class, their verbal communication skills regarding matters of musical structure, and their ability to reflect on their own learning.

[6.13] One of my biggest challenges in implementing the inverted method was that I was learning a new model while simultaneously trying to mentor other instructors on how to use it. Prior to the curricular change, my primary concern was uniformity of content delivery across sections. As a result, my weekly GTA meetings would be structured as a top-down discussion focused mainly on content. Transitioning to an inverted model shifted the focus of our conversation more towards teaching strategies. Each week we shared the stresses, concerns, and successes of our classroom experience and learned from each other’s victories and mistakes. Teaching in an environment where every strategy was open to trial and interrogation inspired many to experiment with more creative approaches tailored closely to their developing teaching styles. My graduate teaching associates have taken on an active role in shaping the course, and their feedback and insights have proven invaluable as we’ve moved forward.

[6.14] The introduction of online videos caused me to reconsider the purpose and value of gathering together in a single place with other people for class. Moving lectures online deepened the interactions I had with my students, changed the way they interacted with each other, and allowed me to help them reflect on their learning. My understanding of the curricular implications of these changes is evolving as I gain experience with the new model. One such implication is that a basic facility in part writing and analysis (which, for practical reasons, is often treated as the end goal of classroom theoretical instruction) more readily assumes its proper place as a means for more critical and creative application.

How I Teach Twelve Sections of Music Theory at Once (Bryn Hughes)

[7.1] At the University of Miami I serve as course coordinator for both freshman and sophomore written music theory. Traditionally, both freshman and sophomore theory are taught in sections of 15–20 students each, and in most semesters the enrollment requires six sections of each class. These sections are primarily taught by graduate teaching assistants drawn from our DMA composition program. In addition, I teach two sections of each class. Though such a high teaching load presents a challenge, many of the pedagogical approaches discussed in this article have allowed me to manage this work effectively.

[7.2] The flipped model has offered an opportunity to ensure that the message being delivered to the student body is relatively consistent. Instead of having my TAs independently deliver lectures to their individual sections, I assign readings and/or video lectures in preparation for an upcoming lesson. While preparing videos and online quizzes is certainly a lot of work, thus far it has not been much more work than preparing lectures in the traditional way. Moreover, it ensures that students in sections other than my own are still receiving the same content as those in my sections.

[7.3] To ensure that students are adequately engaged with material presented to them online, I assign just-in-time teaching quizzes based on my plans for the upcoming class. Preparing JiTT quizzes certainly takes time, but this investment often saves time that would otherwise be spent grading large stacks of papers. When designing JiTT quizzes, my main purpose is to gather a rough measurement of students’ understanding of the material in order to be better prepared for the upcoming class. I have employed a range of different formats and lengths of JiTT quizzes.

[7.4] An additional benefit of JiTT is that it provides me with a window into sections of the class that I do not teach. I am able to assess the struggles of all of the students in the freshman and sophomore classes. TA meetings are no longer spent reviewing what went wrong in the previous week’s lectures, but rather discussing how to approach the upcoming classes based on the assessment data provided by the JiTT quizzes. I am able to offer specific, tailored advice to my TAs on how to address problems before they become major issues.

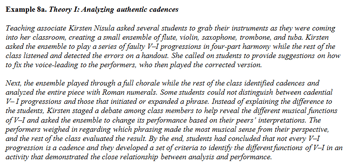

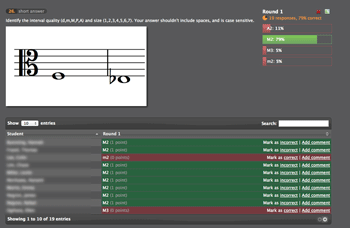

Example 9. JiTT quiz and assessment data

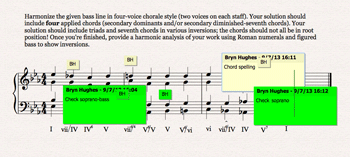

Example 10. Part-writing homework, completed in Sibelius, submitted online, and graded in Sibelius

Example 11. Workbook assignment, completed by hand, submitted online as a PDF, and graded on the iPad using iAnnotate

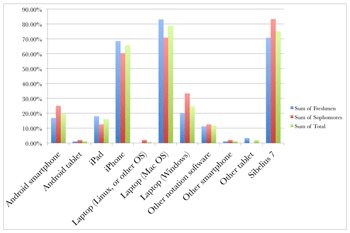

Example 12. Students’ ownership of technology

[7.5] Example 9 shows some sample data from a JiTT quiz on cadences. On this quiz, students listened to single phrases and identified the cadence that concluded each phrase. The breakdown of responses for this question suggested that my students were having slight difficulty recognizing the difference between imperfect and perfect authentic cadences. Perusing this information before class, I decided to review these differences briefly before moving on to more deeply engaging activities, such as writing and performing these cadences in four-part chorale style.
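For readers who export quiz responses from a learning management system, the kind of response breakdown described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the tool used to produce Example 9: the response data, the cadence labels, and the function names `response_breakdown` and `most_common_error` are all hypothetical.

```python
from collections import Counter

# Hypothetical (correct answer, student answer) pairs for ONE quiz question.
# These values are invented for illustration; they are not the data in Example 9.
responses = [
    ("PAC", "PAC"), ("PAC", "IAC"), ("PAC", "PAC"), ("PAC", "IAC"),
    ("PAC", "PAC"), ("PAC", "HC"), ("PAC", "PAC"), ("PAC", "PAC"),
]


def response_breakdown(pairs):
    """Return each submitted answer's share of all responses (0.0 to 1.0)."""
    counts = Counter(given for _, given in pairs)
    total = len(pairs)
    return {answer: n / total for answer, n in counts.items()}


def most_common_error(pairs):
    """Return the most frequent wrong answer, or None if all were correct."""
    errors = Counter(given for correct, given in pairs if given != correct)
    return errors.most_common(1)[0][0] if errors else None


breakdown = response_breakdown(responses)
confusion = most_common_error(responses)
print(breakdown)
print("Most common wrong answer:", confusion)
```

With the invented data above, the most frequent wrong answer is the imperfect authentic cadence, which is the sort of signal that might prompt a brief review at the start of class.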

[7.6] Reconsidering my approaches to grading and assessment has also allowed me to better manage my classes. I have yet to commit to a full-fledged standards-based grading system; however, I do use some of the same tactics to lighten the grading load while maintaining the educational benefit for our students. Although I still use paper-and-pencil quizzes, whenever possible I test fundamental skills in an electronic format to allow for automatic grading. Students are required to take these quizzes multiple times until a standard grade is achieved (typically 90–93%); this is to ensure a level of mastery. Larger tasks, such as traditional assignments, are assessed holistically in categories instead of by a complicated points-based rubric. Papers are graded intentionally vaguely, so that students can read through their assignments, fix errors, and resubmit for a higher grade. Furthermore, since much of the content delivery is moved outside of class, students are given the opportunity to begin traditional homework tasks in class with the instructor’s supervision before submitting their assignments online outside of class.

[7.7] Electronic submission of assignments is accomplished in a number of ways. The University of Miami requires its incoming music students to purchase notation software, which I take full advantage of for assignments that require them to do some kind of model composition (see Example 10). But even for handwritten assignments, such as annotated scores or form diagrams, the technology available to them is so prevalent today that it does not hinder their ability to submit assignments electronically. For handwritten assignments, I ask students to submit PDF copies of their work, by way of a scanner or smartphone app (see Example 11).

[7.8] One might think that it is unreasonable to expect all of our students to have this technology available to them. I asked students to complete a survey about the technology and software that they own on the first day of class. As Example 12 shows, over 85% of my students have some kind of smartphone, and nearly all of them have a laptop. Those without these items can use the scanning function of the ubiquitous photocopiers located throughout the university campus. Alternatively, if the instructor teaches at a university where technology is much less available, technology-heavy assignments could be completed in the university computer lab, or in pairs or groups. Both Trevor de Clercq (2013) and Kris Shaffer (discussed in section [8]) have reported a similar ubiquity of technology at their respective institutions, and I suspect the same could be assumed at many colleges in North America.

[7.9] The flipped-classroom strategy has afforded me time to offer my students the opportunity to begin, and sometimes complete, their “homework” in class. Moving written work to the classroom allows me to have greater control over this process, to be more present and available to my students during the most difficult stages of learning, and to instill confidence in my students’ abilities to complete the remainder of the assignments on their own outside of class. It allows for any common issues to be addressed before the assignment is completed, and it allows the students to learn from each other (instead of simply copying each other’s work). All of these factors lessen the grading burden for me and my TAs once the assignments are officially submitted. Finally, because students submit these assignments electronically before class, I can use those assignments to frame upcoming class activities, which is another form of just-in-time teaching.

[7.10] Moving content delivery outside of class has also allowed me to more frequently implement peer instruction during class time. This can be done in myriad ways: using a class response system, clickers, colored index cards, or even more informally through in-class activities or question-and-answer sessions. In particular, using a class response system has revealed two benefits of peer instruction in addition to those already discussed above. The first is that it all but guarantees full-class participation. More outgoing students are less able to spoil the experience for others by blurting out answers before everyone gets a chance to think. Conversely, I am able to monitor responses from the entire class closely and call upon any student not participating to do so. The second benefit is that it gives me another means of verifying the quality of the content in the sections I am not teaching, and helping the TAs better organize their classes.

Example 13. A sample question from Learning Catalytics, delivered in real time during a class on intervals

[7.11] I design the peer instruction tasks and my TAs simply copy the peer instruction modules for their respective classes. As with the JiTT quizzes, if I want to monitor the sections I do not teach, I can go through the peer-instruction responses after they have been completed and adjust my overall course strategy accordingly. Example 13 shows the “instructor view” in the class response system Learning Catalytics from a question given during a recent class on intervals. For this particular question, students saw the graphic showing the interval, along with a text box into which they could input their answers. As the answers are submitted, the instructor view updates to show both the individual student responses and the overall class performance. Depending on the results, the instructor can choose to pause to re-explain a concept, suggest that students try to instruct each other, or move on to a more challenging concept or exercise.

[7.12] These hacks have allowed me to handle a large teaching load and maintain consistency across multi-section courses taught primarily by TAs. By utilizing these pedagogical tools, I have freed my TAs to focus more on being effective teachers. Most importantly, these techniques benefit my students, helping them to master important musical concepts and skills, and helping them to take ownership of their own musical development.

Flexible Musicianship (Kris Shaffer)

[8.1] Charleston Southern University is a moderately small, teaching-focused university with a small department of music (13 full-time faculty and just under 100 undergraduate music majors). During my second year at CSU, we combined courses in music theory and aural skills into a single musicianship course sequence. All musicianship courses met five days per week, for a total of six hours of class time in the keyboard and computer lab, where every student had access to a piano keyboard and a computer. As the sole faculty member responsible for the musicianship courses, I taught three courses per semester, totaling 18 hours per week in the classroom.

[8.2] This environment created significant challenges, as well as amazing opportunities. The heavy teaching load (effectively a full-time teaching load plus an overload each semester equivalent to two 3-credit-hour courses) created a need to minimize grading and class preparation as much as possible without sacrificing the quality of instruction. Meeting five days per week prohibited me from employing just-in-time teaching as described above: there was not enough time between successive classes for students to do a reading or watch a video and take an online quiz, and then for me to use the results of that quiz to plan class activities for the next morning’s meetings. On the other hand, meeting several hours per week in front of piano keyboards and computers in a class where theoretical and aural instruction were combined was a wonderful opportunity to foster active student engagement with music. It also helped us integrate musical performance, composition, analysis, and listening conceptually and in practice.

[8.3] In this environment, I found that a combination of the inverted classroom (particularly the inquiry-driven model), standards-based assessment, and peer instruction (with a modified version of just-in-time teaching) helped me to increase student learning while simultaneously reducing my out-of-class preparation and grading work. Following are examples of how I employed these hacks in the context of notation fundamentals and species counterpoint.

Fundamentals

[8.4] The first semester of CSU’s musicianship sequence focused primarily on laying a solid foundation in aural skills, keyboard skills, and notational proficiency before proceeding to more complex topics later in the course sequence. To support this, I employed a standards-based assessment system that focused student attention on mastering those foundational elements before moving on to the more advanced concepts.

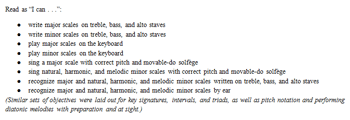

Example 14. Subset of learning objectives for Musicianship I, fundamentals unit


[8.5] I provided students with a detailed list of specific course objectives relating to notation, keyboard performance, vocal performance, solfège, and aural recognition of musical structures (see Example 14). A student passed an objective when he or she performed perfectly, or nearly so, on an assessment task. Performance tasks included standard sight-singing exams or quick checks at the keyboard in class. Notational objectives were assessed via brief timed quizzes, where students were asked to write or identify notational elements in roughly three times the duration needed for me to perform the same tasks. Typically, multiple course objectives were “open” at any time, allowing students to self-direct their study to a significant—but limited—extent, and choose when to assess which objectives. I generated an online assessment report daily that included the list of course objectives and noted which objectives had been passed (with dates), attempted (also with dates), or not yet attempted. Final grades were determined not by average performance on all assessments performed, but by the number of objectives passed by the end of the semester. In class, I regularly informed students roughly where they should be in their assessment progress in order to achieve the final grades desired.
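The bookkeeping described in this paragraph can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the system actually used at CSU: the `ObjectiveRecord` structure, the function names, and especially the grade cutoffs are hypothetical, since the text does not say how many passed objectives corresponded to each letter grade.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional, Tuple


@dataclass
class ObjectiveRecord:
    """One learning objective for one student (hypothetical structure)."""
    name: str
    attempts: List[date] = field(default_factory=list)
    passed_on: Optional[date] = None

    def status(self) -> str:
        # Mirrors the three report states: passed, attempted, not yet attempted.
        if self.passed_on is not None:
            return f"passed ({self.passed_on.isoformat()})"
        if self.attempts:
            return f"attempted ({max(self.attempts).isoformat()})"
        return "not yet attempted"


# Hypothetical cutoffs: minimum number of objectives passed per letter grade.
EXAMPLE_CUTOFFS: List[Tuple[int, str]] = [(4, "A"), (3, "B"), (2, "C"), (1, "D")]


def final_grade(records, cutoffs=EXAMPLE_CUTOFFS) -> str:
    """Grade by the number of objectives passed, not by averaging scores."""
    passed = sum(1 for r in records if r.passed_on is not None)
    for minimum, letter in sorted(cutoffs, reverse=True):
        if passed >= minimum:
            return letter
    return "F"


def progress_report(records) -> str:
    """One line per objective, in the order the objectives are listed."""
    return "\n".join(f"{r.name}: {r.status()}" for r in records)
```

Because the grade depends only on the count of passed objectives, a failed attempt never lowers a grade; it simply leaves the objective open for another try.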

[8.6] Class time did not consist entirely of assessments. In fact, many of the notation quizzes were given online, outside of class. This freed up class time to delve into more difficult conceptual issues, as well as spend time on more challenging performance and listening tasks rather than notational basics. Like the inverted class more generally, this puts simpler cognitive activities—those lower on Bloom’s taxonomy of learning—outside of class, allowing class time to be devoted primarily to more complex cognitive activities, which are higher on Bloom’s taxonomy (see Example 6 above). Additionally, holding class in the keyboard/computer lab meant that during times when individual assessments were performed (primarily vocal and keyboard performance), other students were at work either on an assigned task or preparing for their own upcoming assessments.

[8.7] Having multiple quizzes open at any time and having a fair degree of freedom for in-class activities meant that Musicianship I involved a significant amount of student self-direction, a common goal of the inverted class and student-centered pedagogy more generally. For instance, a basic concept like scales or key signatures needs no direct instruction in class. Some students need basic information available in any theory or aural skills textbook, and most need time to memorize and/or increase their speed at recognition or writing. Thus, we spent 20 minutes of class on writing quizzes for major key signatures (recognition quizzes could be taken online), and no time for instruction or discussion; students drilled themselves or each other outside class in preparation for the quizzes. At the end of the first week, every student but one in the two sections of Musicianship I had passed that objective, along with several others. Further, and more telling, when those students began work on sight-singing, they were consistently faster and more accurate at recognizing keys and meters of melodies sung in class than their Musicianship III colleagues who had experienced a more traditional lecture–worksheet–quiz approach the previous year.

[8.8] Though much of the fundamentals unit was self-directed, there were structured class activities as well. One fundamentals topic that required significant structured activities in Musicianship I was intervals, a topic that typically causes some conceptual confusion for students and that is rarely covered by private or ensemble instructors prior to college. I provided an online, written resource on intervals (now part of Open Music Theory) for the students to read prior to class, and the first class session was devoted to a peer instruction session using ConcepTest “clicker” questions, as discussed in this article (par. 4.7ff.).

[8.9] The ConcepTest session on intervals played out as follows. (See this blog post for a description of how I use this same technique for the study of musical form.) I presented students with a series of notated intervals. Students identified each interval using the class response system, which provided me with real-time data about student understanding. If the students were generally correct, I confirmed the correct answer with a brief explanation and moved on; if generally incorrect, I used the response data to ascertain what the largest misconceptions might be and then provided a mini-lecture on those misconceptions. For example, if students largely analyzed the interval C–A as a fifth, I would remind them of the convention that diatonic intervals (both generic designations like “sixth” and specific designations like “major sixth”) count both the first and the last note, and then field specific questions from students if necessary. If between roughly 35% and 70% of student responses on a clicker question were correct, I directed students to discuss the question in small groups, as per standard ConcepTest methodology (see par. 4.7ff.).
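The counting convention and the response-driven decision described above can be sketched as follows. This is a rough illustration rather than the software used in class: the function names are hypothetical, and treating “generally correct” as more than 70% and “generally incorrect” as less than 35% is an assumption drawn from the approximate range given in the text.

```python
from collections import Counter

LETTERS = "CDEFGAB"
GENERIC_NAMES = {1: "unison", 2: "second", 3: "third", 4: "fourth",
                 5: "fifth", 6: "sixth", 7: "seventh"}


def generic_interval(lower: str, upper: str) -> str:
    """Ascending generic interval within an octave, counting both endpoints."""
    steps = (LETTERS.index(upper) - LETTERS.index(lower)) % 7
    return GENERIC_NAMES[steps + 1]  # +1 because the first note is counted too


def next_step(responses, correct, low=0.35, high=0.70) -> str:
    """Choose the next class activity from the share of correct responses."""
    if not responses:
        raise ValueError("no responses collected")
    share = Counter(responses)[correct] / len(responses)
    if share > high:
        return "confirm and move on"
    if share >= low:
        return "peer discussion"
    return "mini-lecture"


def most_common_error(responses, correct):
    """Most frequent wrong answer, a hint at the largest misconception."""
    wrong = Counter(r for r in responses if r != correct)
    return wrong.most_common(1)[0][0] if wrong else None
```

For the C–A example, `generic_interval("C", "A")` counts C, D, E, F, G, A and returns a sixth; a class that mostly answers “fifth” has counted the steps between the notes rather than the notes themselves.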

[8.10] By the end of a single 80-minute peer instruction session on intervals, student responses had risen from less than 50% accuracy on the first few interval analyses to more than 90% accuracy by the end of class. Students still struggled with speed of interval analysis for some time afterward, but only a handful of students struggled with accuracy on subsequent assessments. I can report that in my class, the impact of these peer-instruction techniques on student learning, which has been established in studies on inverted classes in the sciences, seems to carry over into inverted music theory courses. The class time and grading time I saved through the use of these techniques allowed me to intervene more with those students who needed additional help.

The Inverted Class and Universal Design for Learning (UDL)

[8.11] In addition to decreasing my grading time and helping students manage their progression towards mastery, the combination of an inquiry-based inverted class with standards-based grading brought my class much more in line with the Three Principles of Universal Design for Learning (UDL) advocated by the National Center on Universal Design for Learning. UDL seeks to create a class environment where students with different cultural and educational backgrounds, different strengths and weaknesses in relation to the course material, and diagnosed or undiagnosed learning disabilities all have a reasonable chance at success without relying on institutional accommodations like extra time or a distraction-free environment for testing. Though I was unaware of UDL when I began flipping my class, I discovered that the inverted class and a flexible standards-based system of assessment enabled students with a wide variety of learning differences to succeed in my classes. The inverted class and flexible SBG both incorporate the three principles of UDL:(13)

- Provide multiple means of representation.

- Provide multiple means of action and expression.

- Provide multiple means of engagement.

[8.12] The inquiry-based study of species counterpoint I described in Shaffer and Hughes 2013 provides an example of these principles at work in an inverted class. Students first performed and analyzed exemplars of a species while collaborating on a class “notebook.” Then they read a condensed explanation of the species and watched a video demonstrating my compositional process. Finally they worked in pairs to compose and perform their own idiomatic exercises. This process exemplified the first two UDL principles listed above. Musical concepts are represented in multiple models that students read, watch, sing, and play. Composing, performing, listening, and notating provide students with multiple means of action and expression.

[8.13] The third principle was not embodied well in that particular course unit, however. Though the students were provided multiple means of engagement with the material, I required most of these modes as part of the assessment rather than giving the students options. Had I focused more on musical concepts when crafting the assessment standards and allowed more flexibility in the tasks I required for them to demonstrate mastery, students could have taken fuller advantage of the means of engagement best suited to their learning. But generally speaking, building flexibility into the inverted course and the standards-based assessments—for example, allowing different students to use different genres of music, instruments, notation technologies (software vs. pencil-and-paper), and assess at different times or in a different sequence of course topics—can help a greater diversity of students succeed without overburdening the instructor, TAs, or tutors.

[8.14] As Anna Gawboy wrote in her discussion of the music-theory sequence at Ohio State University, there are as many types of class activity as there are class meetings. I have provided some examples that worked well for my classes at Charleston Southern, which can serve as a basis for other instructors looking to incorporate SBG, JiTT, and/or the inverted class model into small, keyboard-based classes in music theory or integrated musicianship. By inverting the musicianship class and moving informational instruction and basic assessment to online and other out-of-class activities, much of class time can be devoted to projects that involve student collaboration, composition, aural analysis, and performance. By employing some of the specific standards-based assessment methods I have presented, grading can be replaced by real-time, in-person feedback. These tasks and feedback methods are often engaging and enjoyable for the students, lead to longer-term retention of content as well as practical and transferable skills, lower the pressure on students while maintaining high standards of mastery, and accommodate a wider variety of students and learning differences. They are also incredibly enjoyable for me as the instructor, especially when they aren’t followed by hours and hours of grading. Once the initial course materials are created or selected, class becomes much more like a performance or composition studio: the instructor ceases to be the fount of information and instead becomes the expert who observes student progress and peer instruction, and directs that progress through feedback and specific encouragement.

Feasibility and Pacing in Adopting the Hacks; or, A Low-Stress Path to Incorporating these Tools (Philip Duker)

[9.1] The department of music at the University of Delaware serves mostly music education and performance majors, with a smaller group of students majoring in composition, theory, music history and literature, and music management. I began teaching at UD in the fall of 2009 and began using just-in-time teaching during my second semester there. I have incorporated the other two hacks since then and have found that my classes have been more effective as a result. While the previous testimonials have given nice examples of how the three hacks can work in the classroom, I hope to add to these and also provide some advice for instructors considering adopting them. Though we have been emphasizing the way in which these hacks work well together, they can be overwhelming when considered as a whole package. As with the adoption of any new technology, many consider it best not to bundle multiple changes together at the same time (Cruickshank 2009). Rather, it is often easier to work incrementally, pulling in one or at most two novel techniques per term so that one can incorporate and assimilate the new workflow.

Just-in-Time Teaching (JiTT)

[9.2] Probably the least radical and most easily adopted technique of the three is just-in-time teaching (JiTT), as it does not need to take a significant amount of your time when compared with hand-grading student work. I began using JiTT in the spring of 2010 for the last semester of our theory core sequence, and have used it every semester since. I became interested in online quizzes because they would return results faster and save time through automated grading, but have since found that there are much better reasons for using them. Even if worth very little in terms of a grade, the quizzes encourage engagement with pieces before class meetings, leading to more fruitful discussions. As an added bonus, my students were happy taking quizzes since the quizzes gave them quick feedback about how they were doing in the class.

[9.3] While online quizzes provided great incentive for my students to practice outside of class (or even just prepare pieces for class discussion), using the information from the quizzes to shape class meetings (i.e., making them JiTT assignments) allowed these quizzes to be used in a much more powerful way. Seeing the overall results from a quiz helped me understand which concepts gave students trouble, and I could then change my lesson plan accordingly. After recording the results from the students’ first attempt, I republished the quizzes as practice assessments that students could take an unlimited number of times as a study tool. Of the three hacks, I have found that JiTT was the one most appreciated by the students, even begrudgingly so in some cases. On my course evaluations, one student wrote: “The quizzes, as annoying as they were, were actually a big help.”
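As a rough illustration of how first-attempt quiz results might be turned into a lesson-planning signal, the following Python sketch tallies correctness by concept and lists the concepts that fall below a cutoff. The function name, the input format (pairs of concept label and correctness), and the 60% threshold are all assumptions for the sake of the example; they do not describe any particular learning management system or the procedure I actually followed.

```python
from collections import defaultdict


def concepts_needing_review(results, threshold=0.6):
    """Return (concept, share correct) pairs below the threshold, weakest first.

    `results` is an iterable of (concept, is_correct) pairs taken from
    students' first attempts at a pre-class quiz (hypothetical format).
    """
    totals = defaultdict(lambda: [0, 0])  # concept -> [correct, answered]
    for concept, is_correct in results:
        totals[concept][1] += 1
        if is_correct:
            totals[concept][0] += 1
    rates = {c: correct / answered for c, (correct, answered) in totals.items()}
    weak = [(c, rate) for c, rate in rates.items() if rate < threshold]
    return sorted(weak, key=lambda item: item[1])
```

A concept that appears at the top of the returned list is a candidate for extra class time, while concepts absent from the list can be treated more briefly.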

[9.4] In the time I have been using JiTT, I have made some small adjustments, but what has evolved the most is how the quizzes factor into final course grades. When I first began using JiTT quizzes, I weighted them as much as full assignments. Now I grade them much more generously and count them as a small portion of the grade. I want students to see quizzes as low-stakes practice with the material, and to understand that I use them much more for gathering information than giving grades. Although I began by making quiz results available immediately after the students took a quiz, I have since started releasing the grades and answers after the quiz is due to discourage “cheating.” Despite the quizzes being worth very little in terms of their grade, I would hear about students taking the quiz and then letting their peers know how they did on certain questions (which students can often figure out even if the results only show their total score). For most students, the delay in seeing their quiz result is often less than 24 hours, which still allows them to relate the work they did on the quiz to the score they receive.